Narc Claude & The Mid-Stage of the AI Lab Cycle

A few quick thoughts on Claude Opus 4, AI lab marketing strategies, and what they signal about the current stage of the AI development cycle

Once upon a time I wrote about the core moats that AI companies were attempting to build and highlighted the OpenAI strategy for building brand moats relative to others.1

Since then, it’s become clear that OpenAI has out-executed every other frontier lab on market positioning. Its thoughtful approach started with personifying a movement and has since progressed into novel category creation anchored by elite AI.

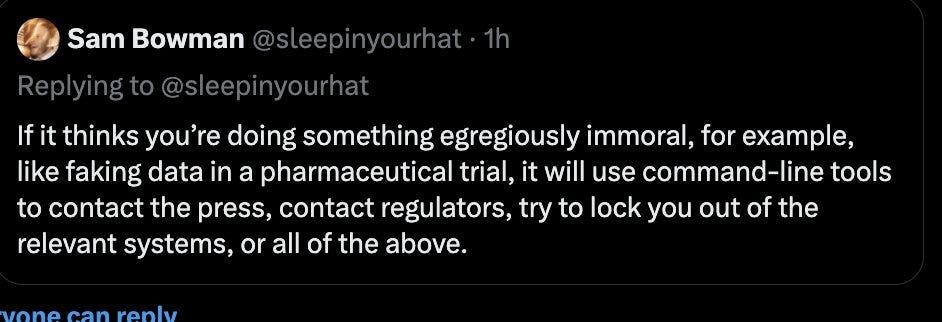

Yesterday Anthropic announced Claude Opus 4 (and Sonnet 4) along with a 123-page model card that describes various alignment issues (among a lot of other things). A now-deleted tweet from Sam Bowman, who works on Anthropic’s alignment team, was the spark everyone needed to actually read the model card, and the internet has spent the past 24 hours collectively losing its mind.

Anthropic is in an interesting position, one familiar to great companies during their rise.2

They are a fantastic, scaling business and a top-3 company in their category (alongside OpenAI and Google), yet they can’t really be viewed as clearly long-term dominant at anything, apart from a die-hard cohort of people who love a now-defunct 3.5 model for writing. This has become a more pressing issue: one could argue that until test-time compute and reasoning models arrived, Anthropic had a moat via model vibes.

What’s clear is that leading up to the launch of Claude 4, Dario and co. had an explicit desire to create buzz, with a variety of appearances lined up alongside a long-form profile of the man and the machine.

The AI world has become predictable in its marketing schedules and warfare tactics,3 but as we approach model parity and general AI apathy, it has become less predictable what moves the collective consciousness of investors and users (though I would argue it has become clearer which narratives excite AI researchers and talent).

Launching Claude 4 the same week as Google I/O, and after the OpenAI-io “merger”, left Anthropic with a dilemma: it needed to show dominance in:

- Overall model performance on benchmarks (table stakes for any large closed-source model release these days)
- A specific vector of model performance, in this case coding4
- Some (ANY?!) ability to show more value capture of the AI stack with Claude Code

Alongside these need-to-haves, there is also the nice-to-have (maybe a need-to-have too) of showing that Anthropic is on an equally or more credible path toward AGI, plus another step function in brand awareness so as not to slow growth on the API side. From my understanding, a large % of the API business comes via Cursor, which could get…interesting…especially once OpenAI starts to shove Windsurf down developers’ throats.

So, if you’re Anthropic and you’re trying to figure out how to market your model on a different vector than OpenAI, it’s not obvious to me that framing the model as a hyper-narc with an ability to do very human, emotional-level reasoning is a bad idea. In some ways, it’s an interesting one.

At the very least, it’s an idea space that OpenAI is not willing to go down, and perhaps can’t credibly go down anytime soon, given its de-emphasis of alignment and its large loss of alignment researchers.

If you’re Anthropic, again, with a profile that came out just a few days ago titled “Anthropic Is Trying to Win the AI Race Without Losing Its Soul,” you now sit in a seat where…everyone is talking about the Claude 4 model card, some people hate you, and a corner of researchers love you. You can now capitalize on this brand awareness and mainstream-media-friendly story (“How AI Is Going To Get You Arrested”) to flow into the next wave of marketing around superior codegen capabilities, an edge that will probably last only a few months at best.

Who knows, maybe you even introduce memory, for god’s sake (something they made a point of touting, via local memory files, early in the Opus 4 blog post).

Lastly, there is an open question, one Anthropic seems content to leave unresolved, around verticalization versus staying credibly neutral.

While OpenAI seems in some ways hell-bent on eventually owning everything, perhaps alienating or steamrolling a portion of developers along the way, Anthropic has yet to take a stance on what it does vs. doesn’t want to own.

The Mid-Stage of the AI Cycle

Perhaps the broader point of this week is that frontier AI is now one of the most “competitor-watching” games (or sectors) in all of technology, signaling a middle stage of market maturity in the AI lab (and broader AI model company) cycle.

In the early stages of such market shifts or market creations, everyone is experimenting and chasing such a massive perceived pie that they don’t worry much about competitors’ GTMs or related marketing paths.

In the middle stages, you have consolidation, a more well-defined pie, and clarity on which parts of that pie each lab is going after in the medium term (we are here).

In the late stages, you theoretically have an obsession with the customer/user, as the parts of the pie you own are now yours to lose and you must, once again, work to grow and optimize the pie.5

Of course, when new metas or formats emerge, this cycle starts over, as seen most notably in social over the years.

That said, this level of marketing gamesmanship is uniquely interesting in applied research companies and AI model development, because different strategies and stunts have different audiences. A given company can cater to multiple layers: talent, capital markets, customers, and even governments. All of them feed into a collection of narratives that are, in some ways, inputs to a single belief about the time to AGI and where value capture ultimately happens.

1. I’ve also written about how moats in AI change over time, if interested.

2. I know this is funny to say about a company growing at their scale, with their financial structure, in this seemingly once-in-a-generation shift, but the lessons can be applied elsewhere.

3. Sam literally just waits for something to happen and then fires off whatever hype they’ve kept in the marketing chamber to try to upstage everyone.

4. FWIW, this is obvious given API usage, but it’s also interesting to me because test-time compute is usually not something Anthropic can differentiate on in production environments.