5 things on my mind - by Michael Dempsey

This email was spawned by this thread. The format will likely evolve, but the process will stay the same: clear out my email, filter down to 5 thoughts, send an email. As you'll see, some are random, and most are unfiltered or poorly edited. Either way, let me know what you like, what you don't, or what you want to dig into more.

5 Things

1) I want my AI self as a personal advisor

There have been countless stories about people taking the data exhaust we put out every day in text messages, emails, chat logs, etc. and creating an AI version of a given person. I'm long AI friends and think they will arrive at scale in some usable form in the next few years. But as the technology improves even further, I'm really interested in the idea of my AI self as an emotionally normalized advisor. I use the term "normalized" because if my AI self is created from my real self's data exhaust, it will implicitly carry some emotional bias. When emotions are running high, it feels valuable to hear how a less emotionally charged version of myself would act in a given situation. And while we often have close friends, family, and confidants to rely on in these moments, as we've seen across so many industries, interacting with a bot can remove the emotional friction that leads people to under-share the full situation. I'd also wager that, after some time, it will be much harder to tell your AI self, which (theoretically) has all of the non-voice primary data about the situation, that it doesn't understand than it is to say the same to a friend who only has pieces of the story.

2) We're in a window where algorithmically-created products are interesting because of the process of creation.

At the 18:30 mark of this conversation Vijay and I recorded, we talk about how something can be interesting simply because it was created by a machine and has rough edges or is slightly off. We recently saw the first large-scale example of this when a GAN painting sold at auction for $432k. Beyond that, I think there are multiple opportunities to experiment in this space, with the constraint being that these generative goods must make their way into the real world rather than remain purely digital assets. A few examples:

Robbie Barrat (who is controversially responsible for the GAN painting) has been experimenting with generative fashion.

Janelle Shane does this at scale every week across multiple use cases.

This week a few different examples of using ML to design sneakers went viral.

3) Question Masters and Deep Divers in VC

I've been (over)analyzing VC profiles and strategies across a bunch of vectors recently. One of the recent thoughts that came from a fairly complex diligence process was the difference between the Question Master VC vs. the Deep Diver VC.

The Question Master (QM) excels at using questions as ammunition to push the founder to take all the moving parts of their business from complex → simple terms.

The Deep Diver (I know this name is awful but we’re going with it, DD) excels at understanding complex topics about the business in a short period of time based on prior work/experience.

In which area does each shine?

The QM will likely filter heavily on a founder's ability to sell (to customers, to the current fund's partnership, or to later-stage VCs) and theoretically can have a wider aperture on the scope of their investments. The QM also runs much less risk of bias from entrenched thinking or bad past information. The QM is quite likely an optimist who weighs founder profile heavily at the early stage, along with pattern matching on macro trends in the business or category.

The DD may be able to invest in companies whose opportunity QMs can't fully appreciate, and may be able to build credibility with the founder more quickly during a competitive process. The DD runs the risk of mistaking their knowledge for an edge in diligence, or of drawing parallels that aren't correct without surfacing them to the founder. The DD may also misjudge a founder's ability to raise follow-on capital.

I view myself as the latter and often put the onus on myself to reach a deep understanding of the founder and their business or technology. I believe this works best for me (at seed) because it lets me understand and relate to a founder and their business on a deeper level than other investors can, in a shorter period of time, while placing a lower burden on the founder than the Question Master does.

I specify seed here because at this stage funds compete on axes like personal fit, ability to help, and often pace. My gut is that at Series B+ stages, as price matters more and pace less, the QM may become the dominant profile.

To be clear, I'm treating these two profiles as if they can't coexist in one person, which is untrue, but discussing gray-area outliers isn't helpful for this thought process. In addition, many will argue that the onus is on the founder to distill their information well. Again, gray area.

4) Are podcasts creating groupthink?

I've noticed a fairly acute convergence of thought across various social circles recently, and I think podcasts are to blame. While we already suffer a form of groupthink within our social circles from the written and visual media we consume, the internet provides enough content diversity that we rarely spend as much time reading exactly the same things as our peers. Podcasts don't feel like they have reached that point yet.

As podcasts have risen to prominence, they have filled similar time slots for large groups of people (commuting, working out, etc.) while also becoming a main delivery channel for non-professional knowledge. Because of the lack of programmatic discovery and diversity within the podcast ecosystem (especially within Apple's main podcast app), I've noticed a convergence of thought on niche topics across pop culture, finance, tech, sports, and more. People's opinions are always informed by the information they gather, but when a large share of that information comes from the same 5-10 podcasts, it's remarkable how conversations become noticeable regurgitations of what you heard a few days ago on the subway.

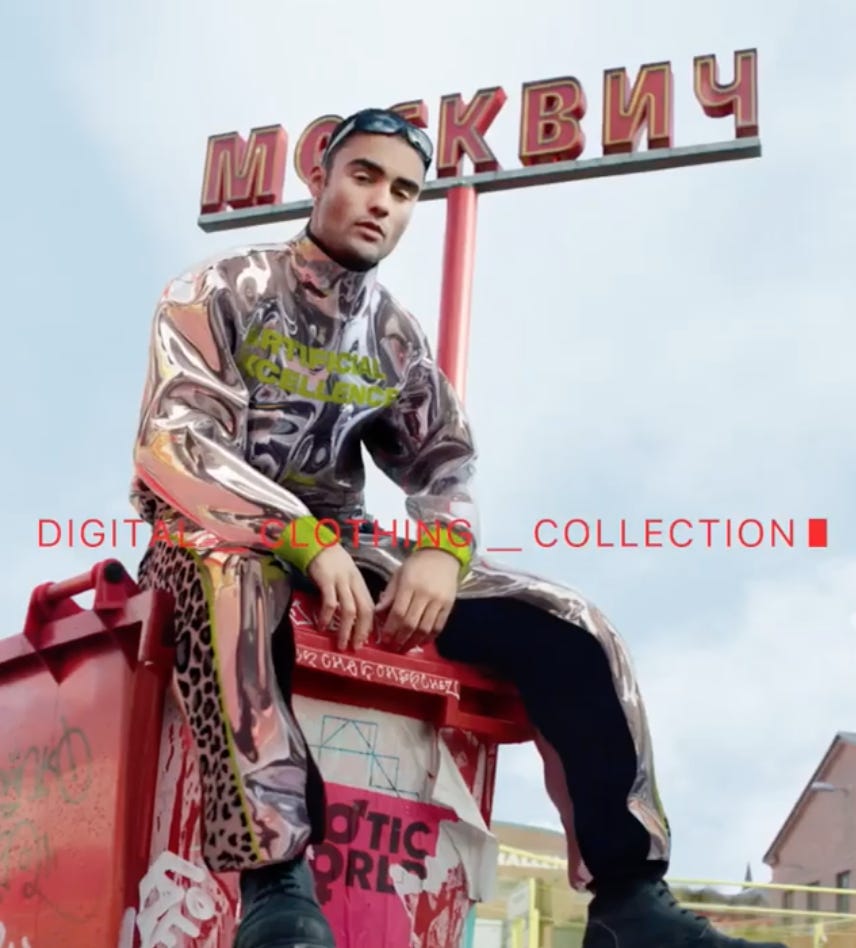

5) Digital fashion is coming soon and may be world-positive. (see paper commentary below)

A few papers this week

AR Costumes: Automatically Augmenting Watertight Costumes from a Single RGB Image - This paper from Disney Research describes using a single RGB image to automatically apply a "watertight" costume to a person in AR. It's interesting from the perspective of what both our future digital avatars and future AR filters could look like. We're only starting to figure out high-fidelity face tracking without depth sensors (see Pinscreen's work here), but full-body tracking is still on the horizon, even as people grow tired of being restricted to shoulders-up modification. The closest comp among existing consumer apps today would be Octi.

Despite this lack of progress on the automation side, a digital celebrity named Perl just posted an intriguing video showing a more manual version of this technology, one that would let Instagram users waste less on fast fashion and instead digitally dress their pictures in single-use outfits. Incredible timing, and something I expect to see productized in the near future.

Photo Wake-Up: 3D Character Animation from a Single Photo - This paper from University of Washington and Facebook researchers lets 2D images auto-animate and come to life, which has implications for AR, as shown in this supplementary video.

Truly Autonomous Machines are Ethical - This paper brought me back to my college ethics class. It's a compelling (if somewhat long) read on the ethical implications and decisions around the treatment, liability, and programming of autonomous robots. I particularly loved this quote: "So, yes, there is risk in attempting to build an autonomous machine, just as there is risk in raising children to become autonomous adults. In either case, some will turn out to be clever scoundrels."

Towards High Resolution Video Generation with Progressive Growing of Sliced Wasserstein GANs

Combatting Adversarial Attacks through Denoising and Dimensionality Reduction: A Cascaded Autoencoder Approach - I'm a strong believer that defending ML models against adversarial attacks is a core component of the future of machine learning.

If you have any thoughts or feedback, feel free to DM me on Twitter.